What Is a Web Crawler in a Website and How Does It Differ From a Search API?

TL;DR: Web crawling is how software discovers and collects content from websites by following links from page to page. Building your own crawler makes sense when you need data from a small set of specific sources. For broad web coverage, search APIs provide access to pre-crawled data without the infrastructure burden.

A crawler visits a webpage, downloads its content, finds all the links on that page, then visits those linked pages and repeats the process. This is how search engines like Google build their indexes, and it's increasingly how AI applications get access to current web information.

Think about what happens when you ask an AI assistant about yesterday's news. AI models are trained on data collected months or years ago, so they may not be able to answer questions about recent events. Crawlers bridge this gap by continuously collecting fresh content from across the web, and search APIs make that crawled data accessible to developers building AI applications, chatbots, and research tools. Some search APIs go even further, indexing content within minutes of publication rather than the hourly or daily crawl cycles of traditional providers.

What Is a Web Crawler?

A web crawler, sometimes called a spider or bot, is software that systematically browses websites to collect their content. Google's crawler, called Googlebot, is the most famous example. It continuously visits billions of web pages to keep Google's search index current.

Every crawler follows the same basic pattern. It starts with a list of URLs to visit (engineers call these "seed URLs," the starting points that the crawler will branch out from). The crawler downloads each page, pulls out whatever text and data it needs, then looks for every link on that page. Each of those links becomes another page to visit. Repeat a few billion times and you've got Google's index.
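The seed-download-extract-enqueue loop above fits in a few dozen lines. Below is a minimal breadth-first crawler that uses only Python's standard library (`html.parser`, `urllib.parse`). The `crawl` function, its `fetch` parameter, and the `max_pages` cap are illustrative names, not any particular library's API. Network access is left to the caller-supplied `fetch` function, so the loop can run against an in-memory stand-in for the web.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Optional
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(
    seeds: List[str],
    fetch: Callable[[str], Optional[str]],
    max_pages: int = 100,
) -> Dict[str, str]:
    """Breadth-first crawl from the seed URLs; returns {url: html} for each fetched page."""
    frontier = deque(seeds)  # URLs waiting to be visited, oldest first
    seen = set(seeds)        # every URL ever queued, so nothing is fetched twice
    pages: Dict[str, str] = {}

    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        html = fetch(url)  # caller-supplied; None means the fetch failed
        if html is None:
            continue
        pages[url] = html

        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and strip #fragments so "/b" and "/b#top" count once.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return pages


# A three-page in-memory "web" stands in for real HTTP requests.
fake_web = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b#top">B</a>',
    "https://example.com/a": '<a href="https://example.com/">Home</a>',
    "https://example.com/b": '<a href="mailto:team@example.com">Email us</a>',
}
print(list(crawl(["https://example.com/"], fake_web.get)))
```

In a real crawler, `fetch` would wrap an HTTP client such as `urllib.request.urlopen`. The `seen` set is what stops the crawler from looping forever when pages link back to each other, as `/a` does here.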

At a small scale, this process is straightforward. A developer can write a basic crawler in a few hours using open-source libraries that handle the mechanics of downloading pages and extracting links. The complexity emerges when you need to crawl thousands or millions of pages reliably.

How Crawlers Differ from Scrapers and Search APIs

Developers often use these terms interchangeably, but they're actually different tools for different jobs.

| Tool | What It Does | Best For | You Manage Infrastructure? |
|---|---|---|---|
| Web Crawler | Discovers pages by following links across sites | Finding new content, mapping websites | Yes |
| Web Scraper | Extracts specific data from known pages | Pulling structured data from specific sources | Yes |
| Search API | Queries pre-indexed web data via API calls | Broad web coverage without infrastructure | No |

Web crawlers explore and map websites, following links to find new content as it gets published. If you're asking "what pages exist out there?", you need a crawler.

Web scrapers, in contrast, extract specific data from pages you already know about. A scraper might pull product prices from an e-commerce site or grab article text from a news page. You point it at specific URLs rather than letting it wander.
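By contrast with a crawler, a scraper is pointed at a page it already knows and pulls out specific fields. The sketch below assumes a hypothetical product page where the name sits in an `<h1>` and the price in a `<span class="price">`. Those selectors, and the `ProductPageScraper` and `scrape_product` names, are assumptions for illustration only. A real scraper is written against the markup of the one site it targets.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ProductPageScraper(HTMLParser):
    """Extracts the title (<h1>) and price (<span class="price">) from one known page.

    These selectors are assumptions about a hypothetical product page.
    """

    # Which closing tag ends each field we capture.
    FIELD_END_TAG = {"title": "h1", "price": "span"}

    def __init__(self) -> None:
        super().__init__()
        self.fields: Dict[str, Optional[str]] = {"title": None, "price": None}
        self._current: Optional[str] = None  # field whose text is being collected
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h1" and self.fields["title"] is None:
            self._current, self._buffer = "title", []
        elif tag == "span" and "price" in classes and self.fields["price"] is None:
            self._current, self._buffer = "price", []

    def handle_data(self, data):
        if self._current:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._current and tag == self.FIELD_END_TAG[self._current]:
            self.fields[self._current] = "".join(self._buffer).strip()
            self._current = None


def scrape_product(html: str) -> Dict[str, Optional[str]]:
    scraper = ProductPageScraper()
    scraper.feed(html)
    scraper.close()
    return scraper.fields


page = '<h1>Blue Kettle</h1><p>In stock</p><span class="price sale">$24.99</span>'
print(scrape_product(page))  # {'title': 'Blue Kettle', 'price': '$24.99'}
```

Fields that aren't found come back as `None`. In production, an unexpected `None` is often the first sign that the site changed its markup.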

Search API providers like You.com already operate crawling systems that continuously index the web, letting you query that data directly.
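To make "pre-indexed" concrete, here is a toy inverted index. The expensive crawling and indexing happen ahead of time, and each query is only a lookup. This is a deliberate simplification (no ranking, freshness handling, or scale). It does not describe how You.com or any other provider actually builds its index, and `build_index` and `search` are hypothetical names.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Done ahead of time, after crawling: map each term to the URLs that contain it."""
    index: Dict[str, Set[str]] = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index: Dict[str, Set[str]], query: str) -> List[str]:
    """Done per query: intersect the URL sets for each term. No page is fetched here."""
    terms = tokenize(query)
    if not terms:
        return []
    matches = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(matches)


crawled = {
    "https://example.com/kettles": "Blue kettle on sale",
    "https://example.com/teapots": "Ceramic teapot, blue glaze",
    "https://example.com/news": "Kettle prices rise",
}
index = build_index(crawled)
print(search(index, "blue kettle"))  # ['https://example.com/kettles']
```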

Many developers use these tools in combination. A team might use a search API for general web data, then build a custom scraper for a specific data source that requires special handling, like extracting structured data from PDF reports or accessing a site that requires authentication. And when you need deep coverage of an entire industry without the crawler maintenance headache, that's where vertical indexes come in.

Vertical Indexes: A Middle Path

Between broad search APIs and custom crawlers, vertical domain indexes offer a third option. Providers build these by focusing crawling resources on specific industries rather than the entire web, extracting structured data that general crawlers miss: legal filings with case metadata, healthcare publications with clinical classifications, retail products with pricing history.

The difference from general search APIs is depth of extraction. A broad index might return a link to a court filing; a vertical legal index extracts the case number, parties, jurisdiction, and ruling. You get API convenience with domain-specific depth, without building and maintaining specialized crawlers yourself.

Building these indexes requires domain expertise. Providers curate source lists specific to each industry, then build custom extraction logic that understands document structure. A legal parser knows where to find case numbers and rulings; a healthcare parser recognizes clinical trial phases and outcomes.
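As a rough illustration of domain-specific extraction, the sketch below pulls a case number, parties, and jurisdiction from the caption of a court filing. The caption layout, the regular expressions, and the `extract_filing_metadata` function are all hypothetical. Real filings vary by court, which is exactly why providers maintain per-source rules. Extracting the ruling itself would need further rules of the same kind.

```python
import re
from typing import Dict, Optional

# Rules for ONE hypothetical caption layout; a real vertical index keeps many
# rule sets like this, one per court or document type.
CASE_NUMBER = re.compile(r"Case No\.\s*([\w:-]+)")
PARTIES = re.compile(r"^(?P<plaintiff>[^\n]+?)[ \t]+v\.[ \t]+(?P<defendant>[^\n]+)$", re.MULTILINE)
JURISDICTION = re.compile(r"^([A-Z ]*DISTRICT OF [A-Z ]+)$", re.MULTILINE)


def extract_filing_metadata(text: str) -> Dict[str, Optional[str]]:
    """Turn a filing's raw text into structured fields; anything not found is None."""
    case = CASE_NUMBER.search(text)
    parties = PARTIES.search(text)
    court = JURISDICTION.search(text)
    return {
        "case_number": case.group(1) if case else None,
        "plaintiff": parties.group("plaintiff").strip() if parties else None,
        "defendant": parties.group("defendant").strip() if parties else None,
        "jurisdiction": court.group(1) if court else None,
    }


filing = """UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

Acme Corp. v. Widget Inc.

Case No. 3:24-cv-01234
"""
print(extract_filing_metadata(filing))
```

A general-purpose index would store this filing as a block of text and a link; the structured dictionary is what a vertical index adds on top.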

This specialized work is why vertical indexes come from providers rather than being something most teams build themselves.

Technical Challenges at Scale

Crawling a few hundred pages is a weekend project. Crawling millions of pages reliably? That's where things get complicated.

- Rate limiting and politeness. Websites don't appreciate getting hammered with crawl requests. Production crawlers must pause between requests to the same site, typically waiting 1-10 seconds between page fetches. At that pace, crawling a single large website can take days or weeks.

- JavaScript-heavy sites. Modern websites often load content dynamically, meaning a basic crawler that just downloads HTML can miss most of the actual content. To see what a human visitor sees, crawlers need to run a headless browser: a full browser that operates without a visible window. Browser automation tools such as Playwright, Puppeteer, and Selenium handle this, but rendering full pages with JavaScript takes far longer than downloading raw HTML. Simple HTTP requests can fetch hundreds of pages per second, while headless browsers might manage only a handful in the same amount of time.

- Anti-bot defenses. Websites block crawlers because their data has commercial value: social platforms protect user data and ad revenue, e-commerce sites guard pricing from competitors, and news outlets don't want their articles republished elsewhere without readers coming back to them. These sites invest in detection through CAPTCHAs, rate limiting, and behavioral analysis that spots non-human patterns in mouse movements and request timing. Overcoming these defenses means rotating network locations, varying request patterns, and constantly adapting as sites update their countermeasures.

- Infrastructure failures. At scale, something is always breaking: network connections drop mid-download, servers return errors instead of pages, and DNS lookups (which convert domain names into server addresses) fail. Production crawlers need retry logic that handles these failures gracefully.

- Site changes. Websites redesign without warning. The code patterns your crawler used to find article text last week now return nothing because the site changed its page structure. Each change requires engineering time to diagnose and fix.
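To put the politeness numbers in perspective: at 1 to 10 seconds per request, a 100,000-page site takes 100,000 to 1,000,000 seconds, roughly 1.2 to 11.6 days of crawling. The sketch below combines two of the concerns above, a minimum per-host delay and retries with exponential backoff for transient failures. `PoliteFetcher`, `TransientError`, the 2-second default delay, and the retry counts are assumptions chosen for illustration. The clock and sleep functions are injectable so the timing can be checked without actually waiting.

```python
import time
from typing import Callable, Dict, List, Optional
from urllib.parse import urlsplit


class TransientError(Exception):
    """Stand-in for retryable failures: dropped connections, 5xx responses, DNS errors."""


class PoliteFetcher:
    """Wraps a raw fetch function with a per-host delay and exponential-backoff retries."""

    def __init__(
        self,
        fetch: Callable[[str], str],
        min_delay: float = 2.0,     # seconds between requests to one host (assumed)
        max_retries: int = 3,       # retries after the first attempt
        base_backoff: float = 1.0,  # retry waits: 1s, 2s, 4s, ...
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.fetch = fetch
        self.min_delay = min_delay
        self.max_retries = max_retries
        self.base_backoff = base_backoff
        self.clock = clock
        self.sleep = sleep
        self.last_request: Dict[str, float] = {}  # host -> time of its most recent request

    def _wait_for_host(self, host: str) -> None:
        """Sleep just long enough that requests to `host` stay min_delay apart."""
        last = self.last_request.get(host)
        if last is not None:
            remaining = self.min_delay - (self.clock() - last)
            if remaining > 0:
                self.sleep(remaining)
        self.last_request[host] = self.clock()

    def get(self, url: str) -> Optional[str]:
        host = urlsplit(url).netloc
        for attempt in range(self.max_retries + 1):
            self._wait_for_host(host)
            try:
                return self.fetch(url)
            except TransientError:
                if attempt == self.max_retries:
                    return None  # give up; a real crawler would log and requeue the URL
                self.sleep(self.base_backoff * 2 ** attempt)
        return None


# Demo with a fake clock, so no real waiting happens.
class FakeClock:
    def __init__(self) -> None:
        self.now = 0.0
        self.sleeps: List[float] = []

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.sleeps.append(seconds)
        self.now += seconds


calls: List[str] = []


def flaky_fetch(url: str) -> str:
    """Fails twice with a transient error, then succeeds."""
    calls.append(url)
    if len(calls) < 3:
        raise TransientError("connection reset")
    return "<html>ok</html>"


clock = FakeClock()
fetcher = PoliteFetcher(flaky_fetch, clock=clock.time, sleep=clock.sleep)
print(fetcher.get("https://example.com/page"), clock.sleeps)
```

Only `TransientError` is retried, by design: a 404 or a block page shouldn't be retried in a loop. A production crawler would also cap the total time spent backing off for any one URL.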

All of this explains why many teams skip building their own crawlers entirely. Search API providers operate at a scale that makes the investment worthwhile. They process billions of queries monthly, maintain infrastructure across data centers, and keep indexes fresh continuously. The cost gets spread across thousands of customers and you query pre-indexed data without managing any of the underlying complexity.

Let someone else deal with the infrastructure while you focus on what you're actually building.

How Web Crawlers Keep Data Current

A crawler that visits pages once misses everything published after that crawl. How often you need to revisit depends entirely on your use case.

Real-time crawling prioritizes speed above all else. News aggregators and financial data applications need articles or filings within minutes of publication, which means continuous infrastructure monitoring sources around the clock.

For teams building training datasets, batch crawling takes the opposite approach. Coverage matters more than freshness here. These systems might crawl billions of pages over weeks or months because the data doesn't need to be current. It needs to be complete.

Incremental updates split the difference. A crawler using this strategy checks news sites hourly, corporate blogs daily, documentation sites weekly. You match crawl frequency to how often content actually changes, balancing freshness against infrastructure costs.
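The tiered schedule described above can be sketched as a priority queue keyed on each source's next due time. The intervals follow the example in the text (hourly news, daily blogs, weekly documentation); `CrawlScheduler` and the category names are hypothetical.

```python
import heapq
from datetime import datetime, timedelta
from typing import List, Tuple

# Revisit intervals from the example in the text; the category names are hypothetical.
REVISIT_INTERVAL = {
    "news": timedelta(hours=1),
    "blog": timedelta(days=1),
    "docs": timedelta(weeks=1),
}


class CrawlScheduler:
    """Min-heap of (next_due, url, source_type): the most overdue source comes out first."""

    def __init__(self) -> None:
        self._heap: List[Tuple[datetime, str, str]] = []

    def add(self, url: str, source_type: str, now: datetime) -> None:
        # New sources are due immediately.
        heapq.heappush(self._heap, (now, url, source_type))

    def due(self, now: datetime) -> List[str]:
        """Pop every source whose revisit time has arrived, then reschedule it."""
        ready = []
        while self._heap and self._heap[0][0] <= now:
            _, url, source_type = heapq.heappop(self._heap)
            ready.append(url)
            next_due = now + REVISIT_INTERVAL[source_type]  # always later than now
            heapq.heappush(self._heap, (next_due, url, source_type))
        return ready


start = datetime(2025, 1, 1)
scheduler = CrawlScheduler()
scheduler.add("https://news.example.com", "news", start)
scheduler.add("https://blog.example.com", "blog", start)
scheduler.add("https://docs.example.com", "docs", start)

print(scheduler.due(start))                        # first visit: all three
print(scheduler.due(start + timedelta(hours=1)))   # only the news site
print(scheduler.due(start + timedelta(days=1)))    # news and blog
```

If the scheduler is polled late, a source that missed several slots is visited once and rescheduled from the current time rather than replaying every missed visit, which is usually what you want when freshness is the goal.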

Search APIs handle these freshness trade-offs so you don't have to. When you call the API, you're querying an index that's already been built and maintained, rather than managing crawl schedules yourself. You.com, for example, maintains a fresh news index that updates within minutes for breaking stories, while also providing access to the broader web index for less time-sensitive queries.

How AI Applications Access Web Data

The data needs vary wildly depending on what you're building. A chatbot has to handle literally any question a user might throw at it, which requires broad coverage across the entire web. A financial research tool needs something different: deep expertise on a narrow set of sources. Different problems call for different approaches.

Conversational AI and chatbots can't predict what users will ask. Breaking news, product specifications, company information, obscure technical documentation: any query is fair game. Search APIs fit this pattern because they provide access to billions of pre-indexed pages without requiring you to anticipate every possible topic.

Deep research tools flip this logic. A financial research agent might focus exclusively on SEC filings (the financial disclosures public companies must submit), earnings transcripts, and analyst reports. A legal tool needs deep coverage of case law and regulatory documents. These applications often combine search APIs for wide-ranging queries with custom crawlers for domain-specific depth, or use vertical indexes that provide industry-specific coverage without the infrastructure overhead.

E-commerce and competitive intelligence sit somewhere in between, needing both real-time pricing data and historical trends. Teams typically use search APIs to monitor the broader market while running custom scrapers to track specific competitor sites.

The pattern is pretty clear: use search APIs when you need breadth, build custom crawlers when you need precise control over specific sources, and consider vertical indexes when you need industry-specific depth without the infrastructure burden.

When to Build a Crawler vs. Use a Search API

So should you build your own crawler or just use an API? A few factors usually decide it.

- Source count. Custom crawlers make sense for 50-100 well-defined sources where you need specialized extraction logic. Thousands or millions of domains? Search APIs are more practical.

- Extraction complexity. Parsing specific table formats from earnings reports, extracting data from PDFs, accessing authenticated sites: these require custom solutions. General web content and search results? APIs already handle that.

- Maintenance burden. Here's why this often seals the deal: custom crawlers can easily consume 10-20 hours weekly in fixes and workarounds as sites change, defenses evolve, and infrastructure needs monitoring. Search API providers absorb this burden as part of their service.

- Freshness requirements. If you need content within minutes of publication, confirm that your chosen approach can deliver for your specific sources.

- Compliance. Some organizations need full control over data collection and storage for regulatory reasons, which is where custom crawlers have an advantage. But if you go the API route, you're not without options. Look for providers with SOC 2 Type II certification, which covers operational security controls, and for the option of zero data retention policies that ensure your queries aren't stored or used for model training.

The practical path for most teams: start with an API, then layer in custom solutions only where the data requirements justify ongoing maintenance.

Start Building with Real-Time Web Data

Web crawling powers everything from search engines to AI assistants, but building and maintaining crawler infrastructure means ongoing engineering work that most teams would rather avoid. For most applications, search APIs offer a faster path to production-ready web data access.

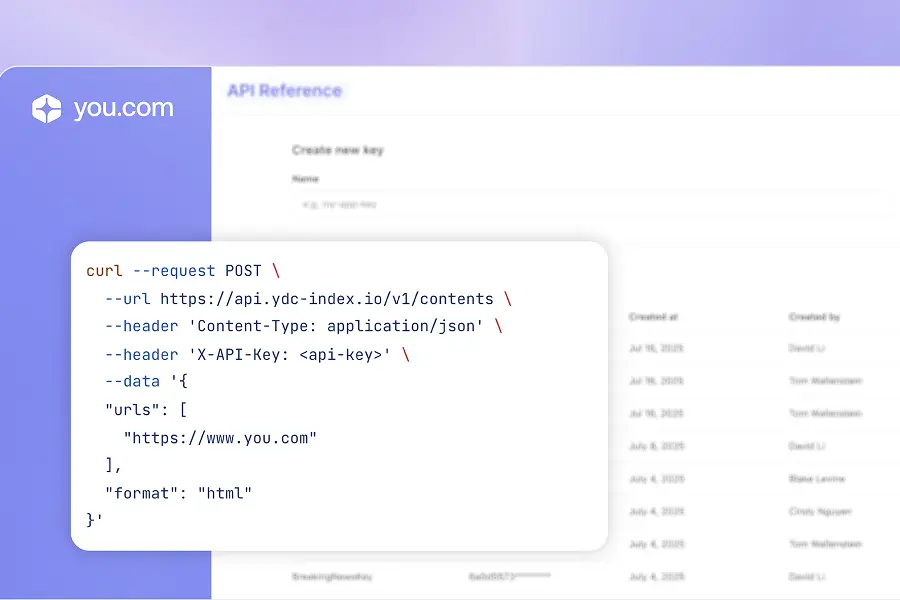

You.com provides a Search API designed for AI applications that need real-time web data. The API returns structured search results with source citations, and the platform operates with zero data retention. Queries aren't stored or used for model training.

Frequently Asked Questions

What types of websites are hardest to crawl?

Social media platforms and large e-commerce sites top the list, but not just for technical reasons. These companies have strong business incentives to block crawlers: social platforms protect user data and advertising revenue, while e-commerce sites guard pricing information from competitors. They employ dedicated teams to detect and block automated access, and they update their defenses constantly. Smaller sites might have basic rate limiting, but major platforms treat anti-bot measures as a core business investment.

How do search APIs handle sites that block crawlers?

Scale. Search API providers operate crawling infrastructure that processes billions of queries, which lets them invest in access techniques individual teams simply can't afford: rotating through huge pools of network addresses across different regions, varying browser characteristics to look like regular users, and constantly adapting to new anti-bot measures. That operational expertise is a big part of what you're paying for when you use a search API.

How much does it cost to build and maintain a custom web crawler?

It depends entirely on scale. A basic crawler for a few dozen sites? Maybe a few days of development plus occasional fixes when sites change. But crawling at web scale is a different story. You're looking at distributed infrastructure across multiple regions, dedicated engineering time, and constant adaptation to anti-bot defenses. For most teams, search APIs like the You.com Search API end up being more cost-effective because you're paying per call instead of maintaining all that infrastructure yourself.

How often should a crawler revisit pages to keep data fresh?

Start by observing the source. Check timestamps on recent articles or look at archive pages to see publishing patterns. Most sites are predictable once you watch them for a week or two. The smarter approach is building in adaptive logic: track when your crawler actually finds new content versus returning unchanged pages. If you're hitting a site hourly but only finding updates once a day, you're wasting resources. Some teams set up lightweight "change detection" checks that run frequently but only trigger full crawls when something new appears.
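One way to implement that adaptive logic is to hash each fetched page and adjust that URL's revisit interval: halve it when the content changed, double it when it didn't, within fixed bounds. The halving/doubling policy, the 15-minute and 7-day bounds, and the `AdaptiveSchedule` class are assumptions for illustration, not a standard algorithm.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdaptiveSchedule:
    """Revisit interval for one URL: halves when content changes, doubles when it doesn't."""

    interval_hours: float = 1.0
    min_hours: float = 0.25   # never check more often than every 15 minutes (assumed)
    max_hours: float = 168.0  # never wait longer than a week (assumed)
    last_hash: Optional[str] = None

    def record_visit(self, content: str) -> bool:
        """Hash the fetched content, adapt the interval, and return True if it changed."""
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if self.last_hash is None:  # first visit: nothing to compare against yet
            self.last_hash = digest
            return True
        changed = digest != self.last_hash
        self.last_hash = digest
        if changed:
            self.interval_hours = max(self.min_hours, self.interval_hours / 2)
        else:
            self.interval_hours = min(self.max_hours, self.interval_hours * 2)
        return changed


schedule = AdaptiveSchedule()
for body in ["v1", "v1", "v1", "v2"]:
    changed = schedule.record_visit(body)
    print(f"changed={changed} next check in {schedule.interval_hours}h")
```

Hashing raw HTML will treat a new timestamp or ad slot as new content, so in practice you'd hash the extracted article text instead. For the lightweight change-detection checks, HTTP conditional requests (`If-None-Match` with an `ETag`, or `If-Modified-Since`) can often confirm that a page is unchanged, via a `304 Not Modified` response, without downloading it again.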
