Unlike traditional search engines, AI search engines interpret natural-language queries, reason across multiple sources, and return synthesized answers with citations instead of lists of blue links.

This is a fundamental shift in how search works. An AI search engine does not hand you pages of links to read. It reads the sources itself and returns a concise, relevant answer, often within seconds.

This guide explores how AI search works and covers real-world use cases.

What is AI Search?

AI search is a technology that uses large language models (LLMs) and machine learning to understand natural language queries, retrieve relevant information from multiple sources, and generate direct answers with citations.

The system reads the available data, reasons over what it finds, and returns a direct answer with source citations. Users therefore no longer have to synthesize dozens of links by hand, which can save hours of research.

AI search platforms typically include these capabilities:

- Conversational interfaces that remember context across follow-up questions

- Semantic understanding that parses meaning and intent rather than matching keywords

- Multi-step reasoning that breaks complex queries into sub-tasks and synthesizes findings

- Live citations that link every claim back to original sources for verification

These capabilities, in turn, are driven by three technical components:

- Natural language processing translates requests into machine-readable intent

- Machine learning ranks and personalizes results based on interaction patterns

- Vectors and transformers work together to process language. Vectors are numerical representations of text that capture semantic meaning, while transformers are neural network models that convert text into these vectors and analyze relationships between words.

These components come together in Retrieval-Augmented Generation (RAG), the architecture that powers most AI search systems.

What is RAG?

RAG works in two steps. First, it retrieves relevant documents from a search index. Then it generates an answer by passing those documents to an LLM as context. Grounding the response in actual sources, rather than relying solely on the model's training data, reduces hallucinations, although it does not eliminate them.
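The minimal Python sketch below makes the two steps concrete under several simplifying assumptions:

- The `DOCS` dictionary is an invented toy corpus.
- Word overlap stands in for real vector retrieval.
- The `llm` parameter accepts any callable, because RAG is not tied to a particular model or client library.

```python
import re
from typing import Callable

# Hypothetical toy corpus standing in for a real search index.
DOCS = {
    "oauth-guide": "OAuth 2.0 clients obtain an access token and send it in the Authorization header.",
    "pagination": "Use the cursor parameter to page through large result sets.",
    "rate-limits": "Requests beyond the quota return HTTP 429 Too Many Requests.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, k: int = 2) -> list[tuple[str, str]]:
    """Step 1: rank documents by word overlap with the query; keep top k with any overlap."""
    q = tokenize(query)
    ranked = sorted(DOCS.items(), key=lambda item: len(q & tokenize(item[1])), reverse=True)
    return [(doc_id, text) for doc_id, text in ranked[:k] if q & tokenize(text)]


def build_prompt(query: str, sources: list[tuple[str, str]]) -> str:
    """Step 2 setup: place the retrieved passages in the prompt as numbered sources."""
    context = "\n".join(
        f"[{i}] ({doc_id}) {text}" for i, (doc_id, text) in enumerate(sources, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {query}"
    )


def rag_answer(query: str, llm: Callable[[str], str]) -> str:
    """Retrieve, then generate: the model sees only the passages the retriever returned."""
    return llm(build_prompt(query, retrieve(query)))


if __name__ == "__main__":
    # An "echo" model that returns its prompt, so the grounded context is visible.
    print(rag_answer("How do I send an OAuth access token?", llm=lambda prompt: prompt))
```

In a real application you would replace the echo lambda with a call to an actual model client, and you would replace `retrieve` with a search index or search API.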

Here’s how all this comes together in practice. Consider building a coding assistant application where users ask "how do I authenticate API requests using OAuth 2.0 in Python?" Without AI search, the application would return ranked documentation links, forcing users to click through Stack Overflow threads, official docs, and tutorials to find answers.

With AI search integrated through a search API, the application reads documentation across multiple sources, extracts the relevant authentication flow, and generates a working code example with citations, all within the application interface.

This shifts applications from "link aggregators" to "answer engines." Products that integrate AI search APIs deliver synthesized responses instead of requiring users to leave the application to research answers themselves.

AI Search vs. Traditional Search

Traditional search matches keywords and ranks pages, while AI search interprets intent, reasons over data, and synthesizes responses with inline citations.

The contrast comes into focus across six core areas:

Comparison Table

| Dimension | Traditional Search | AI Search |
| --- | --- | --- |
| Query understanding | Matches keywords | Infers context and intent |
| Ranking logic | Static signals (links, keywords) | Semantic analysis with vectors and transformers |
| Answer format | List of URLs | Direct answer plus inline sources |
| Personalization | Limited (history, location) | Session-aware, behavior-driven |
| Speed to insight | Moderate (click and read) | Rapid (answer rendered in one step) |
| Typical challenges | Misses nuance, struggles with long-tail queries | Higher compute cost, risk of hallucination |

AI search can be integrated directly into products through APIs. For example, DuckDuckGo uses You.com's Web Search API to power DuckDuckGo AI Chat, and Windsurf uses the same API to give its coding agents access to code repositories and developer documentation on the web.

How AI Search Is Used Today

AI search is used across three main categories: consumer applications that serve end users directly, enterprise workflows that improve internal operations, and developer tooling that provides programmable access to search capabilities. Each category demonstrates different implementation approaches and use cases:

Consumer Applications

Consumer applications demonstrate how AI search adapts to different input types. Hundreds of millions of people use voice assistants daily, asking questions out loud and receiving spoken answers without touching their devices. Visual search takes this further: tools like Google Lens let users point their camera at a product to instantly find purchase links and reviews. For text-based shopping, recommendation engines now understand intent rather than just matching keywords, helping people discover products they're actually looking for rather than products that happen to contain the right search terms.

Enterprise Workflows

Knowledge-management systems use vector search to scan contracts, slide decks, and chat logs, and they surface the most relevant passage instead of a list of filenames. Customer-support teams embed the technology in chatbots so users can resolve issues without waiting in a queue. With Retrieval-Augmented Generation, a system can pull live sales numbers, combine them with historical data, and generate a briefing in seconds.

Developer Tooling

Developer tools usually integrate AI search APIs that return citation-backed responses. Platforms that host machine-learning models, datasets, and demos use the same approach to improve discoverability, helping users find assets by what they do rather than by filename.

Where Does the Data for AI Search Come From?

AI search answers come from a data pipeline with five stages: content gathering, indexing, vectorization, retrieval, and synthesis through RAG.

Content Gathering

Continuous web crawling pulls new, public-facing pages while distributed connectors stream documents from private stores like SharePoint or Databricks. Traditional engines refresh on crawl schedules, but modern AI search systems add event-driven listeners so breaking information gets indexed within seconds.
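Scheduled crawling and event-driven updates differ in when an index changes. The sketch below shows the event-driven side: an index that applies change events the moment a connector emits them. It is illustrative only. `ChangeEvent`, its `action` values, and `LiveIndex` are hypothetical names, not part of SharePoint, Databricks, or any specific connector API.

```python
from dataclasses import dataclass, field


@dataclass
class ChangeEvent:
    """Hypothetical change notification emitted by a source connector."""
    doc_id: str
    action: str  # "upsert" or "delete" (illustrative values)
    text: str = ""


@dataclass
class LiveIndex:
    """Toy index that applies each change immediately instead of waiting for a recrawl."""
    docs: dict[str, str] = field(default_factory=dict)

    def apply(self, event: ChangeEvent) -> None:
        if event.action == "upsert":
            self.docs[event.doc_id] = event.text  # new or updated text is searchable right away
        elif event.action == "delete":
            self.docs.pop(event.doc_id, None)  # removed documents stop appearing in results
        else:
            raise ValueError(f"unknown action: {event.action!r}")


if __name__ == "__main__":
    index = LiveIndex()
    for event in [
        ChangeEvent("pricing-page", "upsert", "Draft pricing"),
        ChangeEvent("pricing-page", "upsert", "Final pricing"),
        ChangeEvent("old-memo", "upsert", "Outdated memo"),
        ChangeEvent("old-memo", "delete"),
    ]:
        index.apply(event)
    print(index.docs)  # {'pricing-page': 'Final pricing'}
```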

Indexing

Indexing splits raw text into chunks and stores them in a distributed database for fast passage lookup. Some systems also integrate real-time data sources, so their information is not limited to what the last crawl captured.
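Chunking is often done with fixed-size windows that overlap slightly. Because of the overlap, a sentence cut at one boundary still appears whole in the neighboring chunk. The sketch below makes a few assumptions:

- Windows are word-based, 40 words long with a 10-word overlap. These are arbitrary illustrative values; production systems often count tokens instead.
- A plain dictionary stands in for a distributed database.

```python
def chunk_text(text: str, chunk_size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into windows of chunk_size words that overlap by `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def index_document(store: dict[tuple[str, int], str], doc_id: str, text: str) -> None:
    """Store each chunk under (doc_id, position) so a single passage can be fetched directly."""
    for position, chunk in enumerate(chunk_text(text)):
        store[(doc_id, position)] = chunk


if __name__ == "__main__":
    store: dict[tuple[str, int], str] = {}
    index_document(store, "oauth-guide", " ".join(f"word{i}" for i in range(100)))
    print(sorted(store))  # [('oauth-guide', 0), ('oauth-guide', 1), ('oauth-guide', 2)]
```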

Vectorization

Vectorization converts each chunk into a high-dimensional vector, a numerical representation that captures meaning rather than keywords. If keywords are street names, vectors are GPS coordinates: they locate semantically similar ideas even when the words don't match.
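Similarity between vectors is commonly measured with cosine similarity, the cosine of the angle between two vectors. In the sketch below, the three-dimensional vectors are hand-picked for illustration; real embedding models output hundreds or thousands of dimensions. `query` stands in for the embedding of a question that shares no words with the phrases it should match.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: values near 1.0 mean they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hand-picked toy vectors; in practice an embedding model would produce these.
vectors = {
    "log in with a token": [0.9, 0.1, 0.0],
    "authenticate API requests": [0.8, 0.2, 0.1],
    "bake sourdough bread": [0.0, 0.1, 0.9],
}

# Pretend embedding of "prove who I am to the server": no shared words, similar meaning.
query = [0.85, 0.15, 0.05]

ranked = sorted(vectors, key=lambda phrase: cosine_similarity(query, vectors[phrase]), reverse=True)
for phrase in ranked:
    print(f"{cosine_similarity(query, vectors[phrase]):.3f}  {phrase}")
```

The two authentication phrases score close to 1.0 and the bread phrase scores near 0, even though none of them share words with the query.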

Retrieval

During retrieval, the engine compares the vector of your question with billions of stored vectors to pull the most relevant passages. A lightweight keyword filter often runs alongside the vector search, so results are both semantically related and contain the terms that matter. For example, if you search for "Python OAuth implementation," the vector search finds semantically related content about authentication, while the keyword filter ensures results actually mention Python rather than returning generic authentication guides for other languages.
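The Python OAuth example can be sketched as vector ranking combined with a keyword filter. The sketch uses one simple way to combine the two signals, assumed for illustration: a passage must contain every required term, and the surviving passages are ordered by cosine similarity. The two-dimensional vectors are toy values, and `hybrid_search` is a hypothetical helper, not a specific engine's API. Real systems often blend a keyword score such as BM25 with the vector score instead of applying a hard filter.

```python
import math
import re


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hybrid_search(
    query_vec: list[float],
    required_terms: set[str],
    passages: list[tuple[str, list[float]]],
    k: int = 3,
) -> list[str]:
    """Keep passages containing every required term, then rank them by vector similarity."""
    hits = []
    for text, vec in passages:
        words = set(re.findall(r"[a-z0-9]+", text.lower()))
        if all(term.lower() in words for term in required_terms):
            hits.append((cosine(query_vec, vec), text))
    hits.sort(reverse=True)
    return [text for _, text in hits[:k]]


# Toy passages with made-up 2-D "embeddings" (dimension 0 ~ authentication, 1 ~ other topics).
passages = [
    ("OAuth 2.0 in Python with requests-oauthlib", [0.90, 0.10]),
    ("OAuth 2.0 in Java with Spring Security", [0.92, 0.08]),
    ("Python list comprehensions explained", [0.10, 0.90]),
]
query_vec = [0.9, 0.1]  # pretend embedding of "Python OAuth implementation"

print(hybrid_search(query_vec, {"python"}, passages))
```

The Java guide is semantically close to the query, but the keyword filter drops it because it never mentions Python.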

RAG Synthesis

RAG hands those passages to a language model that synthesizes a coherent answer with citations. Citations reduce hallucinations by anchoring each statement to source text that the reader can verify.
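Citations only anchor an answer if each marker points to a passage the model was actually given. The sketch below is a minimal post-generation check. It assumes the model was asked to cite sources as `[n]`, a convention chosen for this sketch. The check maps each marker to its source and flags any number that has no matching source.

```python
import re


def resolve_citations(answer: str, sources: list[str]) -> tuple[dict[int, str], list[int]]:
    """Map [n] markers to the n-th source (1-based) and list markers with no such source."""
    cited = sorted({int(n) for n in re.findall(r"\[(\d+)\]", answer)})
    resolved = {n: sources[n - 1] for n in cited if 1 <= n <= len(sources)}
    dangling = [n for n in cited if n not in resolved]
    return resolved, dangling


if __name__ == "__main__":
    sources = ["https://example.com/oauth-overview", "https://example.com/token-refresh"]
    answer = "Send the access token in the Authorization header [1]. Tokens can expire [3]."
    resolved, dangling = resolve_citations(answer, sources)
    print(resolved)  # {1: 'https://example.com/oauth-overview'}
    print(dangling)  # [3]
```

When `dangling` is non-empty, the application can regenerate the answer or drop the unsupported claim.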

Because each stage is modular, the system can tune freshness or factuality without overhauling the entire stack.

What Makes a Good AI Search API?

A good AI search API needs three qualities: reliable uptime so applications don't fail when users need answers, model flexibility to optimize for different use cases, and composable architecture that lets developers combine capabilities without managing multiple integrations.

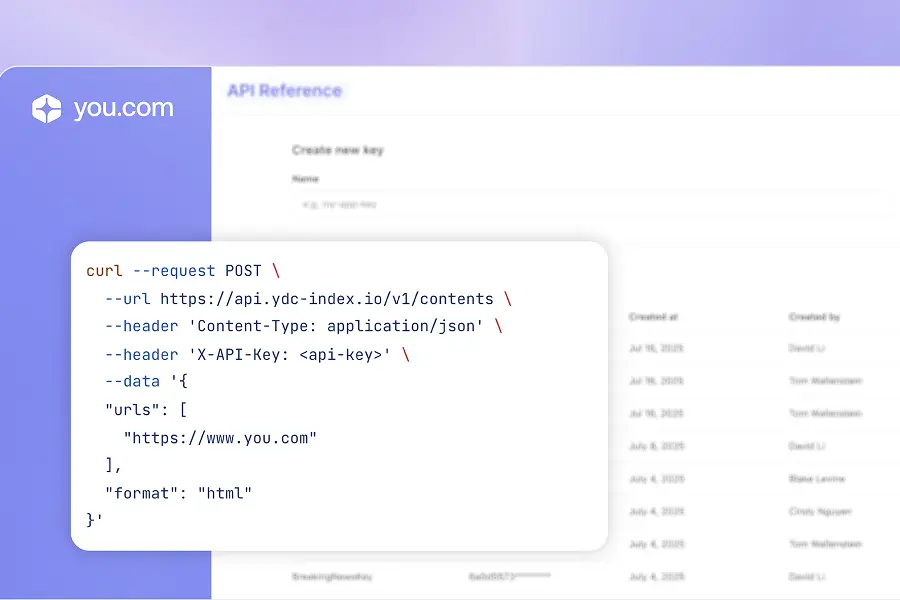

The You.com Web Search API provides this foundation for developers building AI applications. The system processes over one billion queries monthly with 99.9 percent uptime, delivering real-time web data with citations through a model-agnostic architecture.

Unlike rigid endpoints that lock you into specific models or data sources, You.com's composable approach lets developers route queries based on requirements. Need low latency for real-time user queries? Route to speed-optimized models. Processing batch research jobs where accuracy matters more than response time? Route to quality-optimized models. The same API handles both use cases without forcing architectural compromises.
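Routing by requirement can be expressed as a small decision function in the calling application. The sketch below is hypothetical: `SearchRequest`, the 2,000 ms threshold, and the tier names `"speed-optimized"` and `"quality-optimized"` are placeholders chosen for illustration, not parameters of the You.com API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchRequest:
    query: str
    interactive: bool                     # True when a user is waiting on screen
    max_latency_ms: Optional[int] = None  # optional latency budget for this call


# Placeholder threshold; tune it from your own latency measurements.
FAST_PATH_BUDGET_MS = 2000


def choose_route(req: SearchRequest) -> str:
    """Pick a (hypothetical) model tier from the request's latency requirements."""
    tight_budget = req.max_latency_ms is not None and req.max_latency_ms < FAST_PATH_BUDGET_MS
    if req.interactive or tight_budget:
        return "speed-optimized"
    return "quality-optimized"  # batch jobs trade response time for answer quality


if __name__ == "__main__":
    print(choose_route(SearchRequest("python oauth example", interactive=True)))
    print(choose_route(SearchRequest("summarize recent startup funding", interactive=False)))
```

Keeping the routing decision in one function means the calling code does not change when the set of tiers does.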

This flexibility extends to data sources. The API provides access to real-time web search, fresh news indexes, and specialized vertical data for domains like legal, healthcare, and retail. Developers can combine these sources programmatically rather than managing separate integrations.

During You.com's 2025 Agentic Hackathon, developer Summer Chang built MINA, a startup research assistant, in just four hours using the Web Search API. The tool synthesizes startup trends, funding activity, and product launches from multiple sources into structured insights with verified citations, demonstrating how developers can quickly build domain-specific intelligence tools on top of reliable search infrastructure.

Start Building with AI Search APIs

As AI search adoption accelerates, choosing reliable AI search infrastructure becomes critical. Most developers currently run three or four search APIs simultaneously because no single provider offers both reliable uptime and model flexibility.

The You.com Web Search API offers developers enterprise-grade search infrastructure with 99.9% uptime, processing over one billion queries monthly. The composable, model-agnostic architecture gives you flexibility to optimize for speed, accuracy, or cost based on your specific use cases. One API, predictable performance, more time to focus on what makes your product unique.

Start testing citation-backed search in applications today.
